AI needs to stay in its lane; it’s as simple as that. When people turn to AI for creativity, ethics, and friendship, we know it has gone too far. A comedian in New York recently found out that there is nothing ‘funny’ about AI. When she received disheartening and frightening news from her doctor, she felt helpless. However, she later learned that her doctor’s use of AI had led to a wrong diagnosis.

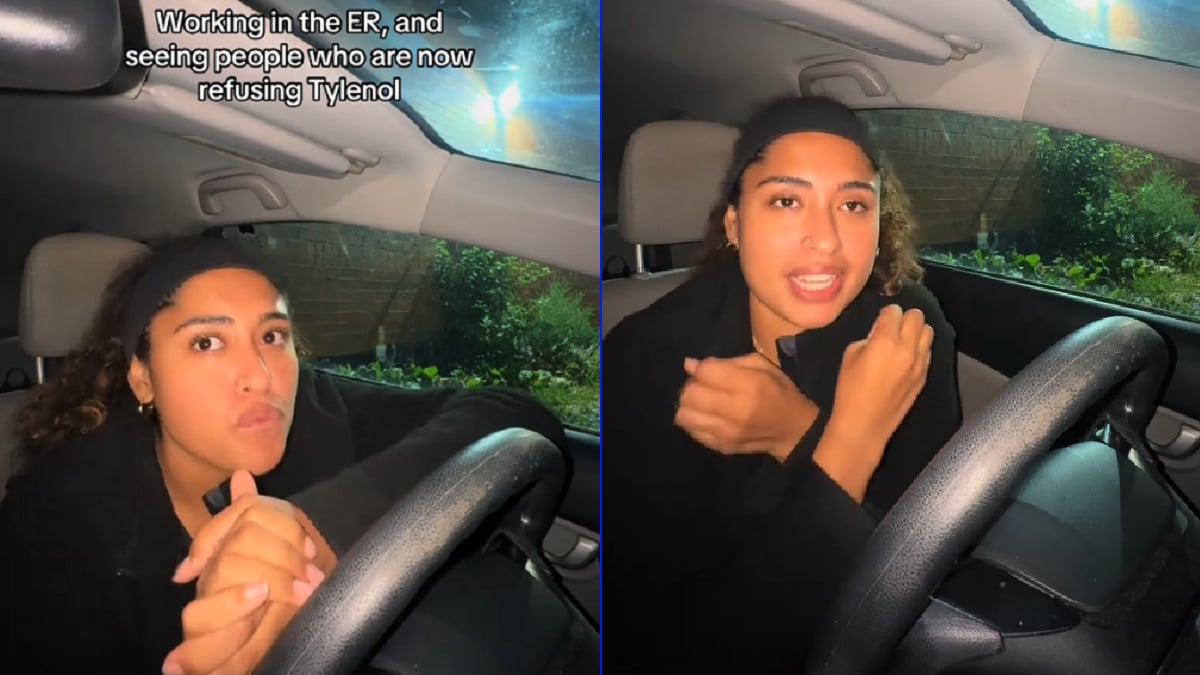

Meg (@megitchell on TikTok) shared a video captioned, “I HATE AI I HATE AI!!!!!!” It turns out her doctor had used AI to read the EKG she received at her yearly checkup. The EKG came back with concerning results, and her doctor quickly told her that she had previously suffered a heart attack she didn’t even know about. The news alarmed her: how could she have had a heart attack and never known it? What signs had she missed?

Meg lived in fear for a month as she waited for a cardiologist appointment, wondering how she had missed the signs. She even feared that she could die. When the cardiologist finally saw her, he said, “You’re fine.” He told her that AI had read her first EKG and that her doctor signed off on the results without ever actually looking at them. Meg ended her video by saying, “F**k the doctor, basically,” for putting her through that fear.

The question is: how would you feel if you found out your doctor used AI on such an important test? And what would you do if you learned that they didn’t even bother to give the results a once-over themselves? Personally, it would make me feel like they don’t care about my life and well-being. As one commenter pointed out on Meg’s video, “I don’t understand how using AI isn’t a HIPAA violation. The company is literally receiving private information without having a release of info document.”

Another commenter was more concerned with Meg’s well-being in general and how long this ordeal affected her. They pointed out, “This has to be medical malpractice. If it’s not it’s only because the laws haven’t caught up with the technology.” If I were in her situation and my doctor’s reliance on AI had caused me all this stress, I wouldn’t be seeing that doctor anymore. It’s troubling that this is what AI has come to.